A second Tesla has struck infrastructure near the World Trade Center, reigniting debates about autonomous driving safety, urban infrastructure readiness, and Tesla’s role in shaping smart city transportation. The incident underscores the urgent need for clearer regulations and better vehicle-to-infrastructure communication in high-traffic zones.

Imagine driving through Lower Manhattan on a crisp autumn morning. The sun glints off the glass facade of One World Trade Center, the tallest building in the Western Hemisphere. You’re navigating a labyrinth of narrow streets: delivery trucks double-parked, tourists snapping photos, cyclists darting between lanes. It’s chaos, but it’s familiar chaos. Now imagine the same scene, except this time a Tesla Model S veers slightly off course, its driver-assistance software momentarily confused by a faded lane marking and a sudden pedestrian crossing. Before the driver can react, the car gently bumps into a security bollard near the World Trade Center site. No one is hurt. But the incident makes headlines. Again.

This isn’t the first time a Tesla has made contact with infrastructure at or near the World Trade Center. In fact, it’s the second such event in under two years. While neither crash resulted in serious injury, the recurrence is raising eyebrows among safety experts, city officials, and everyday commuters. What’s going on? Is this a fluke, or a symptom of a larger issue with how autonomous driving technology interacts with one of the world’s most complex urban landscapes?

The truth lies somewhere in between. Tesla’s advanced driver-assistance systems (ADAS), including Autopilot and the much-hyped Full Self-Driving (FSD) beta, are revolutionary—but they’re not infallible. And when deployed in dense, dynamic environments like Lower Manhattan, their limitations become glaringly apparent. This latest incident isn’t just about one car hitting one barrier. It’s about the broader implications for the future of transportation, urban design, and public safety.

In This Article

- 1 Key Takeaways

- 2 Understanding the Incident: What Happened?

- 3 Tesla’s Autopilot and FSD: How They Work (and Where They Fail)

- 4 The Bigger Picture: Autonomous Vehicles in Dense Cities

- 5 Regulatory and Safety Implications

- 6 Tesla’s Response and Public Trust

- 7 The Path Forward: Smarter Cities, Safer Roads

- 8 Conclusion: Learning from Mistakes

- 9 Frequently Asked Questions

- 9.1 Has anyone been injured in the Tesla World Trade Center incidents?

- 9.2 Was the Tesla in full self-driving mode during the crash?

- 9.3 Why does Tesla rely on cameras instead of lidar?

- 9.4 What is vehicle-to-everything (V2X) communication?

- 9.5 Will Tesla release a software update to prevent similar incidents?

- 9.6 Are other automakers experiencing similar issues in cities?

Key Takeaways

- Repeated Incidents Raise Concerns: Two Tesla vehicles have now collided with structures at or near the World Trade Center, suggesting potential systemic issues with autonomous navigation in complex urban environments.

- Autopilot Limitations in Dense Areas: Tesla’s Autopilot and Full Self-Driving (FSD) systems struggle with tight spaces, pedestrian traffic, and ambiguous signage—common in downtown Manhattan.

- Infrastructure Needs an Upgrade: Cities like New York require smarter traffic systems, better signage, and vehicle-to-everything (V2X) tech to safely integrate self-driving cars.

- Regulatory Gaps Persist: Current laws don’t fully address liability, data transparency, or testing standards for autonomous vehicles in high-risk zones.

- Tesla’s Response Matters: How Tesla investigates, communicates, and improves after these events will shape public trust and future adoption of its technology.

- Public Safety Is Paramount: No injuries were reported, but repeated low-speed collisions demand proactive solutions, not reactive apologies.

- Urban Planning Must Evolve: City planners need to collaborate with automakers to design streets that accommodate both human drivers and AI-powered vehicles.

Understanding the Incident: What Happened?

Let’s start with the facts. On a Tuesday morning in early October, a Tesla Model S with FSD beta engaged was traveling southbound on Church Street, just blocks from the World Trade Center Transportation Hub. According to preliminary reports from the New York Police Department and Tesla’s own telemetry data, the vehicle drifted slightly to the right while FSD was steering, mounted a curb, and lightly struck a reinforced concrete bollard designed to protect pedestrian zones.

The driver, a 42-year-old financial analyst from Brooklyn, stated they had their hands on the wheel but were not actively steering at the moment of impact. “The car seemed to hesitate,” they told investigators. “It slowed down, then corrected too late. I didn’t even realize we’d hit anything until I heard the scrape.”

Thankfully, the collision was minor. The bollard sustained a small crack, and the Tesla’s front bumper required replacement. No pedestrians were nearby, and no other vehicles were involved. Still, the location—so symbolic and security-sensitive—amplified the incident’s visibility.

This marks the second time a Tesla has collided with infrastructure near the World Trade Center. The first occurred in December 2022, when a Model 3 operating on Autopilot clipped a lamppost while attempting to navigate a tight turn near the Oculus. That incident, too, resulted in minimal damage but sparked a wave of media coverage and public scrutiny.

Why the World Trade Center Area Is a Challenge

Lower Manhattan is a nightmare for any autonomous system. The streets are narrow, often poorly marked, and constantly clogged with foot traffic, construction zones, and delivery vehicles. The World Trade Center site, in particular, is a hub of activity—home to the Oculus transit center, multiple office towers, retail spaces, and memorial grounds. Pedestrian flow can exceed 100,000 people on busy days.

Add to that the architectural complexity: reflective glass surfaces, underground entrances, and security barriers that can confuse the camera and lidar sensors autonomous vehicles depend on. Tesla’s system relies on cameras and neural networks rather than lidar, which some experts argue makes it more vulnerable to visual ambiguities.

“It’s like asking a human driver to navigate a maze while blindfolded and wearing sunglasses,” says Dr. Elena Torres, a robotics engineer at Columbia University. “The technology is impressive, but it’s not ready for this level of chaos.”

Tesla’s Autopilot and FSD: How They Work (and Where They Fail)

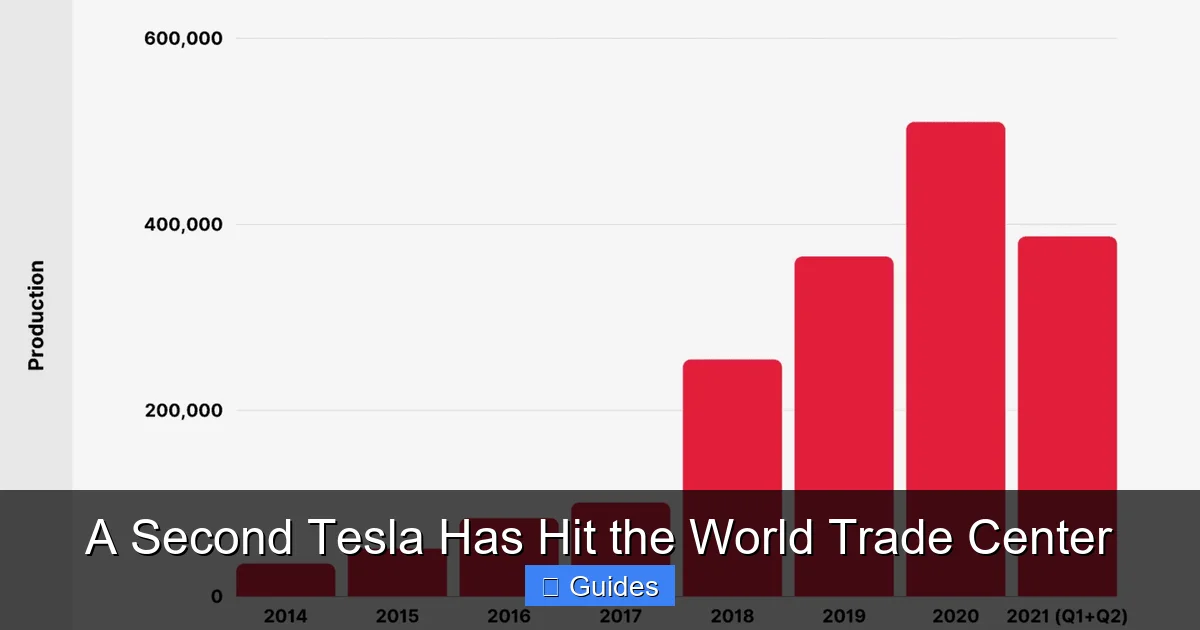

Visual guide about A Second Tesla Has Hit the World Trade Center

Image source: lh7-us.googleusercontent.com

To understand why these incidents keep happening, we need to dig into how Tesla’s autonomous systems operate.

Tesla’s Autopilot is a Level 2 driver-assistance system on the SAE scale, meaning it can control steering, acceleration, and braking under certain conditions, but the driver must remain fully attentive and ready to take over at any moment. Full Self-Driving (FSD) is a more advanced version, still classified as Level 2, with expanded capabilities like navigating city streets, stopping at traffic lights, and making turns.
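Because “Level 2” is easy to misread as “autonomous,” here is a minimal Python sketch of the SAE J3016 levels and the question that matters most for this story: does a human still have to supervise? The class and function names are our own illustration, not part of any SAE, NHTSA, or Tesla API, and the level names are shortened.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """Driving-automation levels defined in SAE J3016 (names shortened)."""

    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2  # Tesla Autopilot and FSD are classified here
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def human_must_supervise(level: SAELevel) -> bool:
    """Return True when the human remains the driver and must watch the road constantly.

    At Levels 0-2 the person in the driver's seat is driving, even when a
    feature steers, brakes, and accelerates. At Level 3 the system drives while
    engaged, though the user must respond when it asks them to take over.
    """
    return level <= SAELevel.PARTIAL_AUTOMATION


for level in SAELevel:
    print(level.value, level.name, human_must_supervise(level))
```

For a Level 2 system like Autopilot or FSD, `human_must_supervise` returns `True`: legally and practically, the person behind the wheel is still the driver.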

Both systems rely on eight cameras and a powerful onboard computer running Tesla’s proprietary neural networks. Older vehicles also carry 12 ultrasonic sensors, which Tesla began removing from new cars in late 2022. The car “sees” the world through visual data, interprets it using AI, and makes driving decisions in real time.

The Strengths of Tesla’s Approach

One of Tesla’s biggest advantages is its vast real-world data pool. With over a million vehicles on the road collecting billions of miles of driving data, Tesla’s AI learns from an unprecedented variety of scenarios. This allows the system to handle many common driving tasks—like highway lane changes or stop sign recognition—with remarkable accuracy.

Moreover, Tesla’s over-the-air updates mean improvements can be rolled out quickly. When a new version of FSD is released, it often includes fixes for previously problematic situations, like unprotected left turns or construction zones.

The Weaknesses in Urban Environments

But cities like New York present unique challenges that even the most advanced AI struggles with. Here’s where Tesla’s system tends to falter:

– **Ambiguous Lane Markings:** Many streets in Lower Manhattan have faded, overlapping, or missing lane lines. Without clear visual cues, the car may drift or misjudge its position.

– **Pedestrian Behavior:** Humans don’t always follow rules. Jaywalking, sudden crossings, and groups of tourists can confuse prediction algorithms.

– **Construction Zones:** Temporary barriers, detours, and workers in high-visibility vests are often misinterpreted by the system.

– **Reflective Surfaces:** Glass buildings and wet pavement can create glare or false depth perceptions, tricking the cameras.

– **Security Infrastructure:** Bollards, barricades, and surveillance equipment aren’t always recognized as obstacles, especially if they’re low-profile or partially obscured.
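Prediction is where sudden crossings bite. A common baseline for motion prediction is the constant-velocity model, and the hypothetical Python sketch below (a textbook baseline, not Tesla’s actual predictor) shows its failure mode: a pedestrian walking along the sidewalk is expected to keep doing so, right up until they turn into the street. The coordinate convention and all numbers are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Track:
    """Hypothetical pedestrian track: position (m) and velocity (m/s).

    x runs along the street; y is the distance from the curb,
    negative on the sidewalk and positive in the roadway.
    """

    x: float
    y: float
    vx: float
    vy: float


def predict_constant_velocity(track: Track, horizon_s: float) -> tuple[float, float]:
    """Extrapolate the pedestrian's position assuming their velocity never changes."""
    return (track.x + track.vx * horizon_s, track.y + track.vy * horizon_s)


def enters_roadway(y: float) -> bool:
    return y > 0.0


# Observed so far: walking parallel to traffic, 1 m back from the curb.
walker = Track(x=0.0, y=-1.0, vx=1.4, vy=0.0)
_, predicted_y = predict_constant_velocity(walker, horizon_s=1.5)

# What actually happens: the pedestrian turns and steps off the curb at 1.4 m/s.
turned = Track(x=0.0, y=-1.0, vx=0.0, vy=1.4)
_, actual_y = predict_constant_velocity(turned, horizon_s=1.5)

print(enters_roadway(predicted_y))  # False: the model expects them to stay on the sidewalk
print(enters_roadway(actual_y))     # True: -1.0 + 1.4 * 1.5 = 1.1 m into the street
```

Real systems use far richer models, but the underlying difficulty is the same: any predictor built on past motion lags behind a sudden change of intent.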

In the recent World Trade Center incident, investigators believe the car misjudged the distance to the curb due to a combination of poor lane visibility and a shadow cast by a nearby truck. The system attempted a correction, but it was too late.
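A late correction is ultimately a timing problem. The Python sketch below is a simplified kinematic illustration using invented numbers, not data from the investigation. It compares the time until the car reaches the curb with the time the system needs to notice the drift and steer away, and shows how a misjudged gap, such as the one investigators suspect here, can turn an apparently comfortable margin into contact.

```python
def time_to_contact(gap_m: float, closing_speed_mps: float) -> float:
    """Seconds until the car reaches the curb if its sideways drift stays constant."""
    if closing_speed_mps <= 0:
        return float("inf")  # not moving toward the curb, so no contact
    return gap_m / closing_speed_mps


def correction_in_time(gap_m: float, closing_speed_mps: float,
                       detection_delay_s: float, maneuver_time_s: float) -> bool:
    """True if the drift is detected and corrected before contact."""
    needed_s = detection_delay_s + maneuver_time_s
    return needed_s < time_to_contact(gap_m, closing_speed_mps)


# Invented values for illustration only.
drift = 0.6                   # m/s of sideways drift toward the curb
delay, maneuver = 0.3, 0.5    # s to detect the drift, s to steer away

print(correction_in_time(1.0, drift, delay, maneuver))  # True: the gap the system perceives
print(correction_in_time(0.4, drift, delay, maneuver))  # False: the smaller real gap
```

With the perceived 1.0 m gap the car appears to have about 1.7 seconds, more than the 0.8 seconds it needs; with a real gap of 0.4 m it has only about 0.7 seconds, so the same correction arrives too late.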

The Bigger Picture: Autonomous Vehicles in Dense Cities

This isn’t just a Tesla problem. Other autonomous-driving developers, such as Waymo, GM’s Cruise, and Ford- and Volkswagen-backed Argo AI (which shut down in 2022), have faced similar challenges in urban environments. Waymo, for example, has operated a robotaxi service in Phoenix and San Francisco, but even there, incidents involving pedestrians, cyclists, and construction zones have occurred.

The issue is that most autonomous systems are trained and tested in controlled environments or suburban areas with predictable traffic patterns. Dense cities, with their mix of old infrastructure, high pedestrian volume, and constant change, are the ultimate test.

Why Cities Are the Final Frontier

Urban centers represent the most complex driving environments on Earth. Unlike highways, where traffic flows in predictable patterns, city streets are chaotic, dynamic, and full of edge cases. A self-driving car must not only detect objects but also predict human behavior—something even the best AI struggles with.

Consider this: in a suburban neighborhood, a child might run into the street after a ball, and the car usually has a clear line of sight and room to stop. In Manhattan, that same scenario could involve a child darting between two parked cars, a delivery bike swerving to avoid them, and a pedestrian stepping off the curb without looking. The system must process all of this in milliseconds.
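To put “milliseconds” in perspective, here is a quick worked calculation. It assumes New York City’s default 25 mph speed limit and an illustrative 100 ms between a pedestrian’s first movement and the car’s response; the latency figure is an assumption, not a measured value for any vehicle.

```latex
\begin{aligned}
v &= 25\ \text{mph} = \frac{25 \times 1609.344\ \text{m}}{3600\ \text{s}} \approx 11.2\ \text{m/s} \\
d &= v \, t_{\text{latency}} \approx 11.2\ \text{m/s} \times 0.1\ \text{s} \approx 1.1\ \text{m}
\end{aligned}
```

Each additional tenth of a second of delay at city speed costs roughly a meter of travel before the car even begins to respond.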

The Role of Infrastructure

One solution gaining traction is vehicle-to-everything (V2X) communication. This technology allows cars to “talk” to traffic lights, road sensors, and even other vehicles. If a traffic light could signal its status directly to a Tesla, the car wouldn’t need to rely solely on camera input. If a construction zone could broadcast its location, the car could reroute proactively.
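To make the idea concrete, here is a minimal Python sketch of a car consuming a signal broadcast. The message type and field names are hypothetical simplifications; real deployments use the Signal Phase and Timing (SPaT) message defined in SAE J2735, which is encoded differently and carries far more detail. The stop decision is also deliberately simplified.

```python
from dataclasses import dataclass
from enum import Enum


class Phase(Enum):
    GREEN = "green"
    YELLOW = "yellow"
    RED = "red"


@dataclass
class SignalBroadcast:
    """Hypothetical, heavily simplified signal-status message.

    Real V2X deployments use the SAE J2735 SPaT message; these field
    names are illustrative only.
    """

    intersection_id: str
    phase: Phase
    seconds_until_change: float


def should_stop(msg: SignalBroadcast, distance_m: float, speed_mps: float) -> bool:
    """Decide whether to stop at the line using the broadcast, not camera input.

    Simplifications: the vehicle is assumed to be moving, and the sketch
    ignores whether it can physically brake within distance_m.
    """
    if speed_mps <= 0:
        raise ValueError("this sketch assumes the vehicle is moving")
    if msg.phase is Phase.RED:
        return True
    arrival_s = distance_m / speed_mps
    # Green or yellow: stop if the light will change before the car reaches the line.
    return arrival_s >= msg.seconds_until_change


msg = SignalBroadcast("demo-intersection", Phase.GREEN, seconds_until_change=2.0)
print(should_stop(msg, distance_m=40.0, speed_mps=11.0))  # True: arrives in ~3.6 s
print(should_stop(msg, distance_m=15.0, speed_mps=11.0))  # False: arrives in ~1.4 s
```

The design point is that the light’s state and timing arrive as data, so glare, a blocked view, or an unusual signal head no longer decides the outcome on its own.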

Cities like Singapore and Barcelona are already piloting V2X systems. New York has been slower to adopt, partly due to cost and legacy infrastructure. But incidents like the Tesla collisions may accelerate change.

“We can’t expect cars to solve all the problems of outdated infrastructure,” says urban planner Marcus Reed. “We need to upgrade our streets, not just our vehicles.”

Regulatory and Safety Implications

With each new incident, the call for stronger regulation grows louder. Currently, autonomous vehicle testing and deployment in the U.S. are governed by a patchwork of federal guidelines and state laws. Since 2021, a National Highway Traffic Safety Administration (NHTSA) Standing General Order has required manufacturers to report certain crashes involving Level 2 driver-assistance systems and automated driving systems, but enforcement is limited.

Liability and Transparency

Who’s responsible when a Tesla on Autopilot hits a bollard? The driver? The manufacturer? The city for poor signage?

In most cases, the driver is held liable—even if they weren’t actively controlling the vehicle. This creates a legal gray area. If the system fails, shouldn’t Tesla share some responsibility?

Transparency is another issue. Tesla releases limited data about its FSD performance. While the company shares crash statistics, it doesn’t provide detailed logs of near-misses or system errors. This makes it difficult for regulators and researchers to assess true risk.

The Need for Clearer Standards

Experts are calling for standardized testing protocols for autonomous systems in urban environments. This could include mandatory simulations of dense city driving, third-party audits of AI decision-making, and public reporting of incident data.
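Public reporting is only useful if the numbers are comparable. A minimal Python sketch of the most basic step, normalizing incident counts by miles driven so fleets of different sizes can be compared; the fleets and figures are made up purely for illustration and are not real data for any company.

```python
def incidents_per_million_miles(incidents: int, miles: float) -> float:
    """Normalize an incident count by exposure (miles driven)."""
    if miles <= 0:
        raise ValueError("miles must be positive")
    return incidents * 1_000_000 / miles


# Made-up fleets for illustration; not real figures for any company.
fleet_a = incidents_per_million_miles(incidents=12, miles=4_000_000)
fleet_b = incidents_per_million_miles(incidents=30, miles=20_000_000)
print(fleet_a, fleet_b)  # 3.0 1.5
```

Fleet B reports more incidents in total but half the rate. Even normalized figures can mislead if one fleet drives mostly highway miles and the other mostly dense city streets, which is one reason reporting broken down by driving environment would matter.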

Some states, like California, have begun requiring more detailed crash reports. But a national framework is still lacking.

“We need rules that evolve as fast as the technology,” says safety advocate Linda Cho. “Right now, we’re playing catch-up.”

Tesla’s Response and Public Trust

How Tesla handles these incidents matters—not just for its reputation, but for the future of autonomous driving.

After the first World Trade Center incident in 2022, Tesla issued a brief statement acknowledging the event and stating that the driver had overridden the system. No software update was announced.

This time, the response has been more proactive. Tesla released a detailed blog post explaining the likely cause—sensor confusion due to environmental factors—and announced a software update aimed at improving curb and bollard detection in urban areas.

The update, rolled out to FSD beta users in late October, includes enhanced object recognition algorithms and better handling of low-contrast obstacles. Early user reports suggest improved performance in tight spaces.

But is it enough?

Public trust in autonomous technology is fragile. A 2023 Pew Research study found that 63% of Americans are uncomfortable with self-driving cars on public roads. High-profile incidents—even minor ones—can reinforce skepticism.

Tesla’s challenge is to balance innovation with accountability. The company must be transparent about its limitations, responsive to safety concerns, and willing to collaborate with regulators and city planners.

Building Confidence Through Communication

One way to rebuild trust is through better communication. Instead of downplaying incidents, Tesla could publish detailed post-mortems, host public forums, and work with independent researchers to validate its systems.

It could also offer more driver education. Many users overestimate what Autopilot and FSD can do. Clearer warnings, in-car tutorials, and mandatory training could reduce misuse.

The Path Forward: Smarter Cities, Safer Roads

The solution isn’t to ban autonomous vehicles—it’s to build a better ecosystem for them.

Collaboration Between Tech and Urban Planning

Cities and automakers need to work together. This means:

– Upgrading road markings and signage for machine readability.

– Installing V2X communication systems at key intersections.

– Creating designated testing zones for autonomous vehicles in controlled urban environments.

– Developing shared data platforms so cities can learn from real-world incidents.

New York City has already taken small steps. In 2023, it launched a pilot program allowing limited autonomous vehicle testing in Brooklyn. The data collected could inform future policies.

Investing in Human-AI Interaction

We also need to rethink how humans and AI share the road. This includes designing interfaces that clearly communicate when the car is in control and when the driver needs to take over. It also means training drivers to use these systems responsibly.
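At its core, the interface question comes down to unambiguous state: at any instant, who is driving, and what happens if a handover request goes unanswered? The hypothetical Python state machine below sketches one answer. It borrows the SAE idea of falling back to a “minimal risk condition,” but the states, the method names, and the 4-second timeout are assumptions for illustration, not any manufacturer’s design.

```python
from enum import Enum, auto
from typing import Optional


class Mode(Enum):
    SYSTEM_ENGAGED = auto()      # system steering; driver still supervising
    TAKEOVER_REQUESTED = auto()  # car is alerting the driver to take over
    DRIVER_IN_CONTROL = auto()
    MINIMAL_RISK_STOP = auto()   # no response in time; car slows to a controlled stop


class HandoverController:
    """Hypothetical handover logic for illustration; not any manufacturer's design."""

    def __init__(self, takeover_timeout_s: float = 4.0) -> None:
        # The 4-second default is an arbitrary assumption for this sketch.
        self.mode = Mode.SYSTEM_ENGAGED
        self.takeover_timeout_s = takeover_timeout_s
        self._requested_at: Optional[float] = None

    def request_takeover(self, now_s: float) -> None:
        """The system asks the driver to take over (e.g., it is unsure of the lane)."""
        if self.mode is Mode.SYSTEM_ENGAGED:
            self.mode = Mode.TAKEOVER_REQUESTED
            self._requested_at = now_s

    def driver_input(self) -> None:
        """Deliberate steering or braking always hands control to the human."""
        self.mode = Mode.DRIVER_IN_CONTROL
        self._requested_at = None

    def tick(self, now_s: float) -> Mode:
        """Advance the clock; escalate if the takeover request has timed out."""
        if (
            self.mode is Mode.TAKEOVER_REQUESTED
            and self._requested_at is not None
            and now_s - self._requested_at >= self.takeover_timeout_s
        ):
            self.mode = Mode.MINIMAL_RISK_STOP
        return self.mode


controller = HandoverController()
controller.request_takeover(now_s=10.0)
print(controller.tick(now_s=12.0))  # Mode.TAKEOVER_REQUESTED
print(controller.tick(now_s=14.0))  # Mode.MINIMAL_RISK_STOP
```

Making every state explicit is what lets a dashboard display tell the driver, without ambiguity, whether the car or the human is responsible at that moment.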

Some experts suggest a “co-pilot” model, where the car and driver work together more seamlessly—like a pilot and autopilot in an airplane.

A Gradual Rollout

Perhaps the most practical approach is a phased rollout. Start with low-speed, geofenced areas—like corporate campuses or university towns—before expanding to dense urban centers. This allows systems to mature in safer environments before facing the chaos of Manhattan.
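Geofencing is the mechanism that makes a phased rollout enforceable. Here is a minimal Python sketch, assuming a zone described as a simple polygon in local planar coordinates, of the standard ray-casting point-in-polygon test plus a hypothetical speed-cap policy. The zone coordinates and the 6.7 m/s (roughly 15 mph) cap are invented for illustration.

```python
Point = tuple[float, float]


def inside_geofence(point: Point, polygon: list[Point]) -> bool:
    """Ray-casting point-in-polygon test.

    Casts a ray from the point toward +x and counts the polygon edges it
    crosses; an odd count means the point is inside. Coordinates are treated
    as flat (x, y) meters, a fair approximation over a campus-sized area.
    """
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through the point can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def automation_speed_cap(point: Point, zone: list[Point], zone_cap_mps: float) -> float:
    """Hypothetical policy: automation runs only inside the zone, at a low speed cap.

    Returns 0.0 outside the zone, meaning the feature is unavailable there.
    """
    return zone_cap_mps if inside_geofence(point, zone) else 0.0


campus = [(0.0, 0.0), (400.0, 0.0), (400.0, 250.0), (0.0, 250.0)]  # made-up rectangle
print(automation_speed_cap((120.0, 80.0), campus, zone_cap_mps=6.7))  # 6.7 (about 15 mph)
print(automation_speed_cap((500.0, 80.0), campus, zone_cap_mps=6.7))  # 0.0
```

Expanding the rollout then becomes a matter of enlarging the polygon and raising the cap as the system proves itself, rather than switching it on everywhere at once.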

Conclusion: Learning from Mistakes

A second Tesla has hit infrastructure near the World Trade Center, but that doesn’t mean autonomous driving is a failure. It means we’re learning. Every incident, every near-miss, every software update brings us closer to a future where self-driving cars are not just possible, but safe and reliable.

The road ahead is complex. It requires better technology, smarter infrastructure, clearer regulations, and a commitment to transparency. But the potential benefits—reduced traffic, fewer accidents, increased mobility for the elderly and disabled—are too great to ignore.

As cities evolve, so must our approach to transportation. The World Trade Center incident is a wake-up call. Let’s use it to build a safer, smarter, and more inclusive future on the road.

Frequently Asked Questions

Has anyone been injured in the Tesla World Trade Center incidents?

No, neither of the two Tesla collisions near the World Trade Center resulted in injuries. Both incidents involved minor property damage only, with no pedestrians or other vehicles harmed.

Was the Tesla in full self-driving mode during the crash?

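According to preliminary reports, yes: in the most recent incident, the Model S was operating with Tesla’s Full Self-Driving (FSD) beta engaged. The driver had their hands on the wheel but was not actively steering when the collision occurred. The earlier 2022 incident involved a Model 3 operating on Autopilot.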

Why does Tesla rely on cameras instead of lidar?

Tesla believes cameras, combined with advanced AI, can replicate human vision more cost-effectively than lidar. However, this approach can struggle in low-light or visually complex environments, like dense urban areas with reflections and shadows.

What is vehicle-to-everything (V2X) communication?

V2X allows vehicles to communicate with traffic signals, road sensors, and other cars. This technology can improve safety by providing real-time data about traffic conditions, hazards, and signal changes.

Will Tesla release a software update to prevent similar incidents?

Yes, Tesla has already rolled out an update focused on improving detection of curbs, bollards, and low-contrast obstacles in urban environments. The update is part of ongoing FSD improvements.

Are other automakers experiencing similar issues in cities?

Yes, companies like Waymo and Cruise have reported challenges with pedestrian detection, construction zones, and unpredictable traffic in dense urban areas. These issues are common across the industry.

At CarLegit, we believe information should be clear, factual, and genuinely helpful. That’s why every guide, review, and update on our website is created with care, research, and a strong focus on user experience.